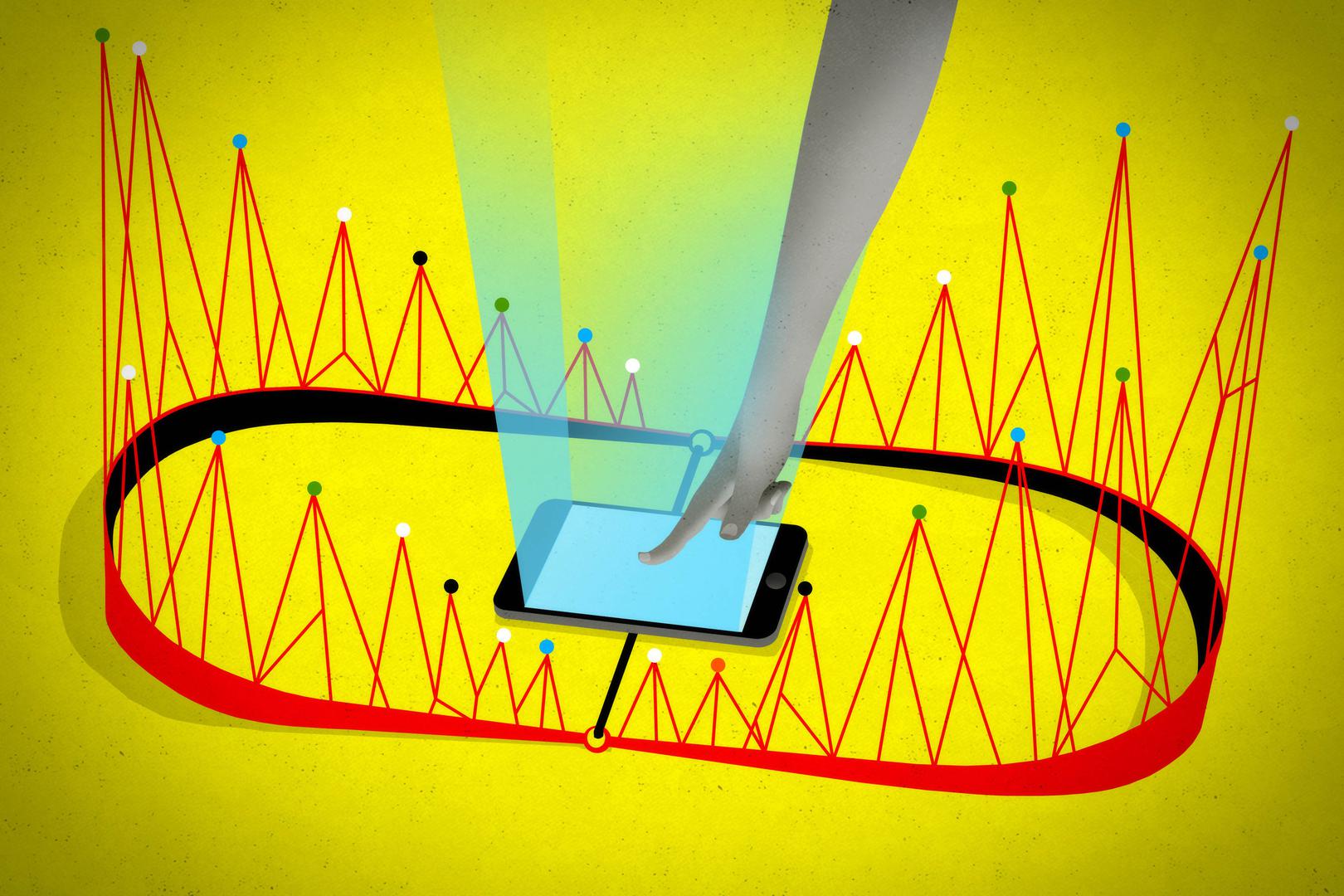

If Facebook were a country, it would be the largest in the world, with over 2 billion users. It would also be ruled by an opaque, unaccountable, and undemocratic regime. Social media has become the modern public square, which is run by unseen corporate algorithms that can manipulate our access to information and even our mood. Social media firms police our speech and behavior based on a set of byzantine rules where companies are judge and jury. And they track our every digital move across the Web and monetize the insights they glean from our data, often in unforeseen ways.

Though the internet has in many ways been a boon to the human rights movement, we have come a long way from the heady days of the “Twitter and Facebook revolutions” during the 2011 Arab uprisings. Trust in Silicon Valley has sunk to an all-time low as the public begins to fully grasp the power we have traded away in exchange for access to seemingly free services.

Even more troubling is Facebook’s apparent retaliation against critics. A New York Times report in November 2018 raised questions about how far Facebook would go to undermine prominent critics like George Soros and the Freedom from Facebook coalition, including commissioning others to spread stories to generate public animus or to discredit them. In a statement released in response to the report, Facebook acknowledged that it had asked a public relations firm to research the possible motivations of critics, but said it had not asked the firm to distribute “fake news.” Human Rights Watch, which accepts no funds from any government, has received grants from Soros.

The inconvenient truth is that tech business models are often at the heart of the problem. Free, advertising-driven services are specifically designed to capture our attention, collect massive amounts of our data, create detailed profiles about us, and sell insights from those profiles to advertisers and other third parties.

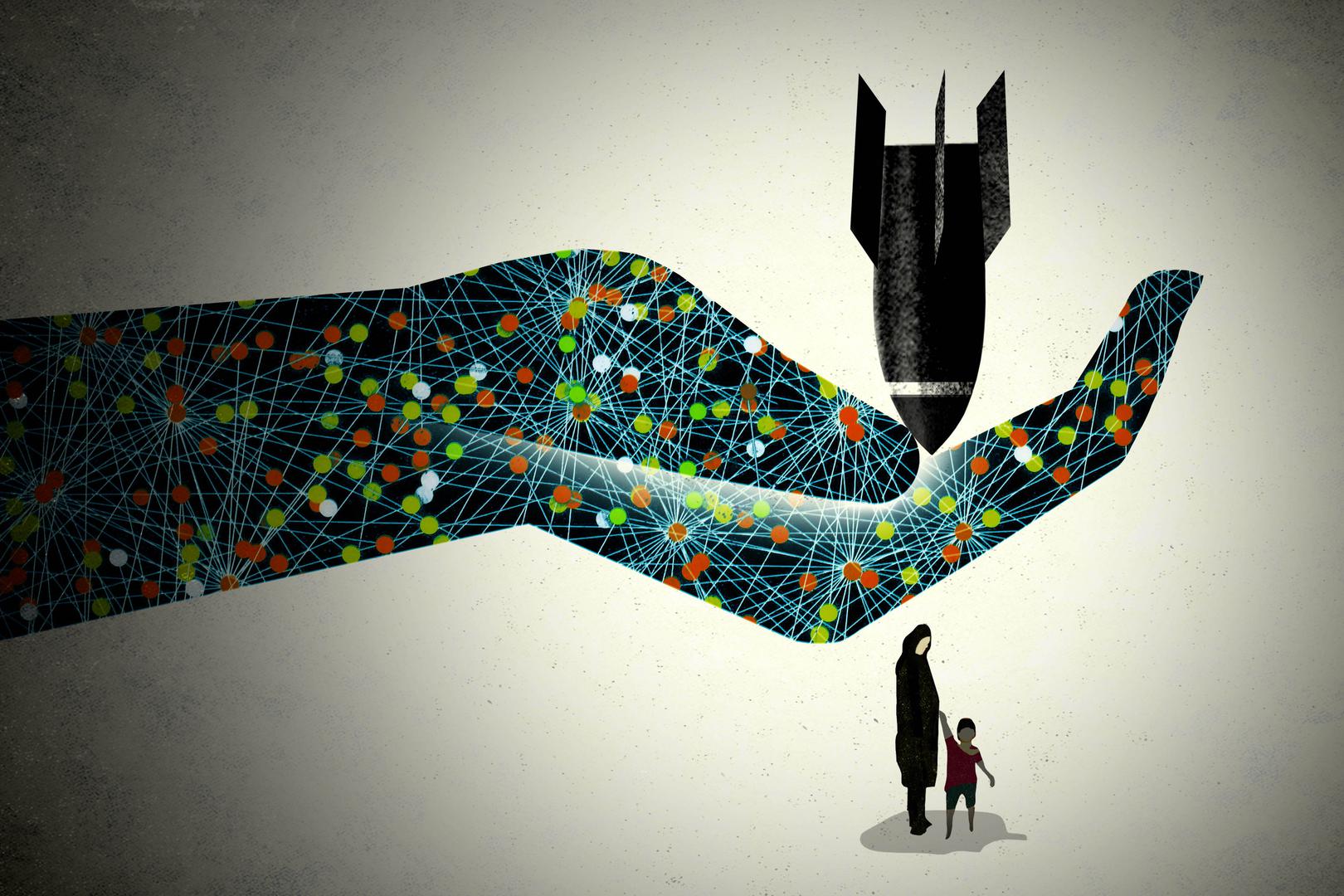

And how tech firms design their services can have serious repercussions for human rights. Governments, trolls, and extremists have weaponized social media to manipulate public opinion, spread hatred, harass marginalized communities, and incite violence. For example, United Nations investigators found that social media and hate speech had played a “determining role” in the campaign of ethnic cleansing against Rohingya Muslims in Burma by security forces. Governments in Russia and the Philippines have mobilized troll armies to spread disinformation and harass and target online critics. At the same time, massive data breaches and the Cambridge Analytica scandal, where a political consulting firm improperly gained access to the Facebook data of millions of users, have driven home just how little control we have over our data.

Some nascent efforts have begun to hold tech companies accountable for human rights harm. The European Union in 2016 passed the world’s most comprehensive data protection regulation, which went into effect in 2018. The law gives users more control over their data by regulating how companies collect, use, and share it. Other countries are looking to these rules as a model. More regulation may be needed to protect privacy or address other harms, though we should be careful that the law doesn’t undermine freedom of expression or further entrench tech companies as arbiters of the public sphere.

To that end, the EU has brought anti-trust actions to address anti-competitive behavior, and Facebook has faced investigations and fines over its mishandling of data. Advocates in the US are exploring similar tactics to target anti-competitive behavior. Meanwhile, investors are pressing companies on their privacy practices, and Facebook has agreed to independent assessments to address racial discrimination and incitement to violence on its service. UN experts have called on companies to submit to other kinds of third-party human rights audits. Even tech workers have begun organizing to ensure the products they build aren’t put to harmful use.

These efforts are a good start, but may not go far enough in challenging underlying business models. Social media algorithms are engineered to maximize “user engagement”—our clicks, likes, and shares—which gets us to provide more data that generates more advertising revenue. But in maximizing engagement, companies may also be amplifying societal outrage and polarization. Algorithms may reward the most inflammatory and partisan content that people are more likely to like and share.

Advertising systems enable businesses to micro-target ads on social media to specific demographics, but also allow governments and trolls to manipulate the public. To meet revenue goals, social media firms must also continuously add users. But they have sometimes rushed to capture markets without fully understanding the societies and political environments in which they operate, with devastating effects on already vulnerable groups. Or, in places like China, governments may require companies to sacrifice users’ free expression and privacy as a cost of entry.

The original sin may be the “free” advertising-driven business model that allowed social media, email, search, and other services to grow into huge, dominant networks. That model is also a significant barrier to addressing digitally mediated human rights harm, from unchecked data collection to gaming of social media algorithms.

If regulators, investors, and users want true accountability, they should press for a far more radical re-examination of tech sector business models, especially social media and advertising ecosystems. And if tech companies are serious about rebuilding user trust and safeguarding our rights, they need to reckon with the harm their technology and very business models can cause.